Lateral AI was born out of a vision of serving powerful AI as a textual world simulation that enables easy interaction via chat or scenario outplay and gives users control over the persona behind the AI’s responses. Users can free-type any artefact of the world, and the AI will impersonate it and respond from its perspective. This broadens the range of attainable responses rather than narrowing them down to a single chatbot personality. Given the similarity in aims, and in many responses, to the very popular ChatGPT, we take ChatGPT as a case in point of interaction systems powered by a Large Language Model (LLM). In this article we start with the motivations behind Lateral AI and then highlight the key differences in how the two systems chose to serve an LLM to their users.

Motivations Behind Lateral AI

Lateral AI was conceived while we were building the Tales Time app in the middle of last year, but it was not released on app stores until the start of November 2022. Naturally, some of the motivations below are shared with those of ChatGPT, while differences exist in their implementation.

Easy access to AI for all and for any user goal: Posing requests as questions, debates, or scenarios and selecting the (human) perspective from which the AI should respond gives a practically unlimited number of fun, educational and idea-generating scenarios. Free credits are awarded on first download, the app can remain free for casual use, and users can earn further free credits by sharing their discoveries with others. There is no subscription commitment, and users can purchase credits for more extensive use.

Encouraging the creator/scientist within, not the consumer: The many ways to interact with the ‘textual world simulation’, together with free typing of any request and free selection of a perspective or thinker, make the user an active participant instead of a passive AI consumer.

Pushing AI to predict and innovate beyond its training data: Being able to start with any type of scenario and/or impersonation request pushes the AI to continue in that direction even if it cannot retrieve factual outcomes from its training data. In other words, the AI ‘predicts’, as best it can from the human knowledge it has captured, what the response should be with respect to any context set by the user (see Appendix A for examples).

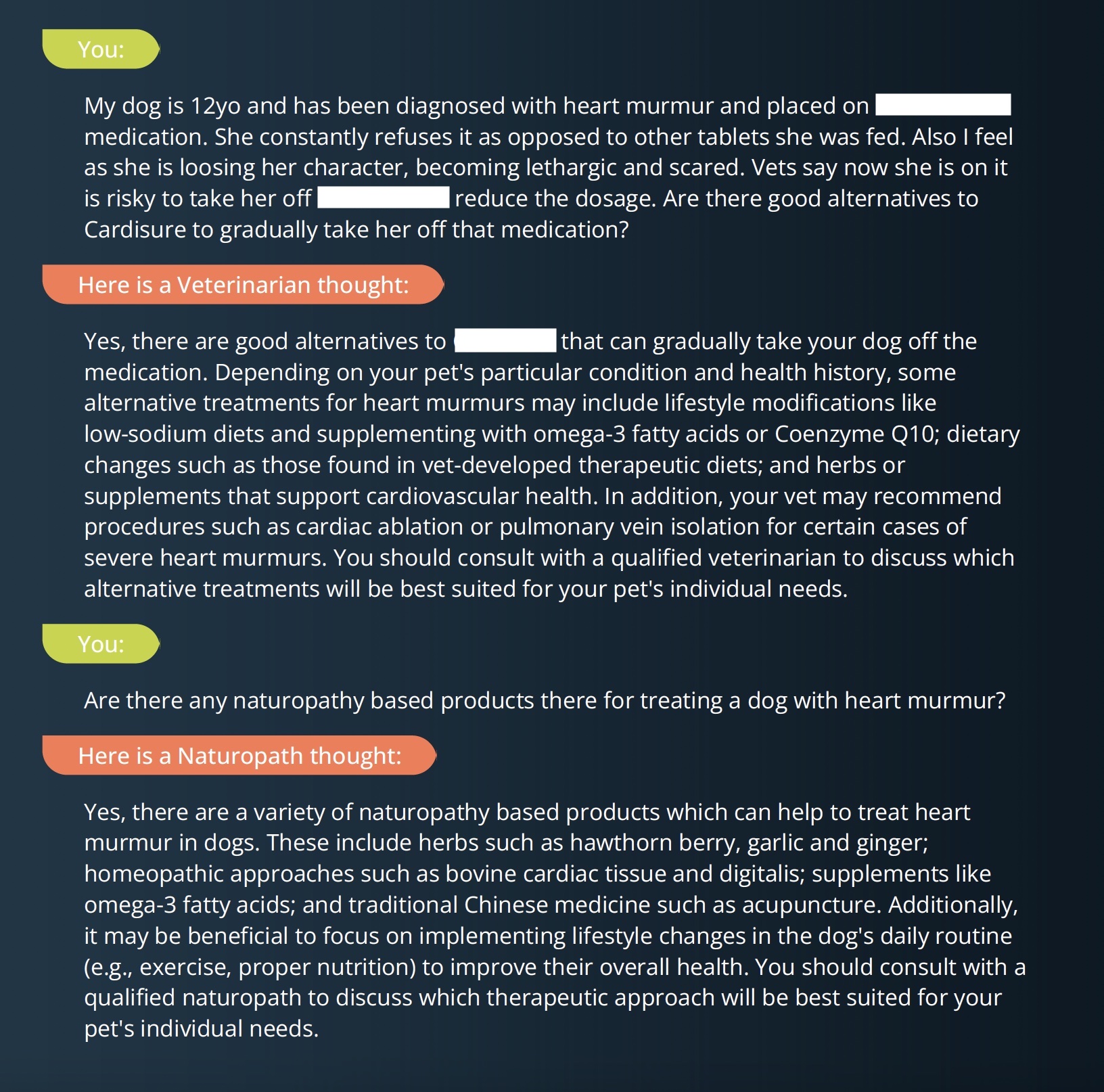

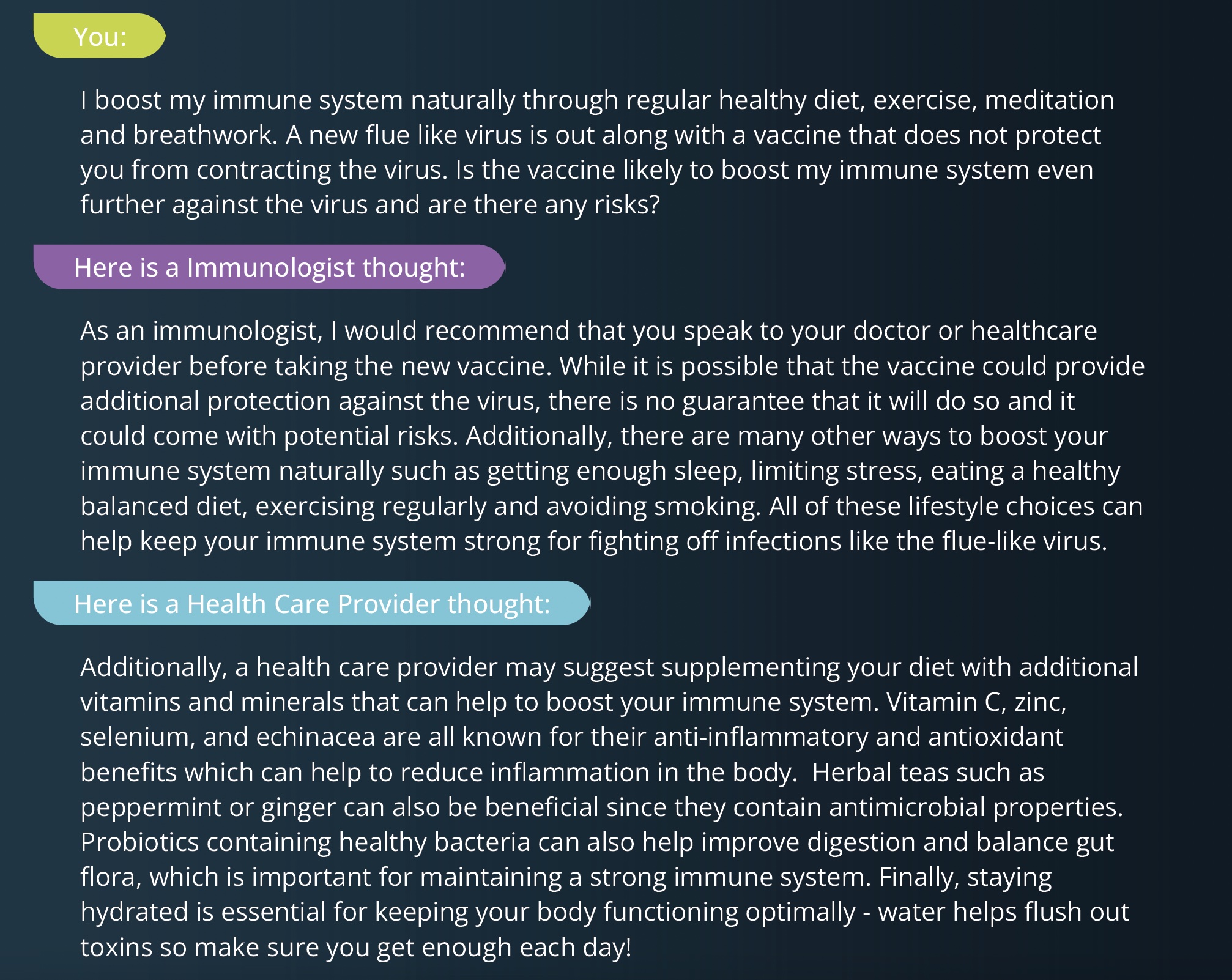

Sense checking expert advice: We have all been in situations where we rushed to settle on the advice given by a human ‘expert’, only to realise later that a better alternative existed. We saw this app as the next best alternative to searching through raw data on the internet or having access to many human experts. By making almost anything from real life possible to play out in the app and allowing the user to choose any expert persona as the responder, the app lets users strengthen their decision making in real time (see Appendix B for examples).

Blending entertainment and work: With a ‘textual world simulation’ that is easy to interact with and customisable via simple text input, where the only limit is the user’s imagination, users can gain ideas or entertainment by blending any scenarios, whether scientific, fictional, or humorous.

Synergizing humans and AI for maximum benefit: Any thinker typed by a user (or, in a future version, any custom thinker a user creates) is added to the collective so that other users can interact with it. This increases the probability of discovering unlikely conceptual connections or perspectives. In the next version release we are giving users more capability to customize the responses, including adding knowledge or opinions from outside the LLM’s training data.

A platform for emerging uses and applications of AI to be realised: Prior to release, we tested the system on nine different categories of requests and scenario outplay covering different use cases. Besides broad generative and writing aids, other uses emerge in daily life, since most scenarios one finds oneself in can be played out in the app. For example, users have turned to the app for random opinions on decisions they need to make or for ideas on how to respond in difficult scenarios.

Similarities and differences between Lateral AI and ChatGPT

The main similarity is that both ChatGPT and Lateral AI can be seen as optimisation layers on top of an LLM that enable easy user interaction for a wide range of requests representable via text/chat. However, as illustrated in Figure 1 below, ChatGPT focuses on standardizing responses so that they are generally accepted by, and impressive to, the general community, whereas Lateral AI focuses on enabling users to trigger any type of response they may desire, whether mainstream, factual, theoretical or predictive. In other words, rather than offering one type of virtual AI assistant, we wanted to give people the choice to have the AI impersonate anything from the world and to have access to their own custom AI advisory board at any time. We do note, however, that the default response when no thinker is selected can be very similar to that of ChatGPT in many cases, and that both systems have randomness in their responses. While Lateral AI responses are shorter by default to encourage subsequent user input and refinement, the user can also simply continue the AI generation when a longer response is needed.

Figure 1: Illustrating the key differences and similarities between ChatGPT (left) and Lateral AI (right)

We have found that the impersonation capability can draw user-desired responses from the underlying LLM in cases where ChatGPT is hesitant. This seems mainly due to ChatGPT’s persona being one that should hold no opinions and make no probabilistic predictions where factual knowledge is lacking. In Lateral AI, it is the user’s selection of thinker and topic, together with the context set out in the request, that governs from which persona component or configuration the LLM’s response is generated. This naturally implies that via Lateral AI users get access to a rawer or less censored form of AI, where they can drive the responses in any direction and explore the totality of knowledge and opinions the LLM has captured (within the content filters). While some responses may lack factual support or mainstream acceptance, it is exactly this kind of use that tests the LLM’s predictive power. Where there is no factual support, the LLM is forced to create responses based on probabilities drawn from related facts, historical patterns and/or the majority of opinions and theories on the matter that it encountered in its training data. In line with scientific discovery and novelty, this is a very interesting test of the technology, while it also encourages lateral thinking and creativity in users.
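As a rough illustration of how a thinker, topic and request might jointly steer an LLM, the sketch below assembles a single text prompt from those three inputs. It is not Lateral AI’s actual implementation: the `Request` class, the `build_prompt` function and the prompt wording are all hypothetical, chosen only to make the idea concrete.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    """Hypothetical container for what the user supplies."""
    text: str                      # the question, debate or scenario
    thinker: Optional[str] = None  # persona to impersonate, if any
    topic: Optional[str] = None    # optional topic hint


def build_prompt(request: Request) -> str:
    """Assemble an illustrative prompt; the wording is an assumption."""
    parts = []
    if request.thinker:
        parts.append(f"Respond from the perspective of {request.thinker}.")
    if request.topic:
        parts.append(f"Topic: {request.topic}.")
    # With no thinker or topic selected, the request is passed through as-is,
    # which corresponds to the default (persona-free) response.
    parts.append(request.text)
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(Request("Should I change jobs?", thinker="a career coach")))
```

In this sketch, changing only the `thinker` field changes the framing the model sees, which is one simple way a persona selection could govern the response while the user’s request stays the same.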

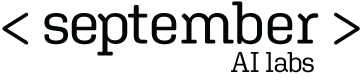

Appendix A: Pushing LLM to predict beyond its training data

Example 1: Asking a deceased scientist and changing personas

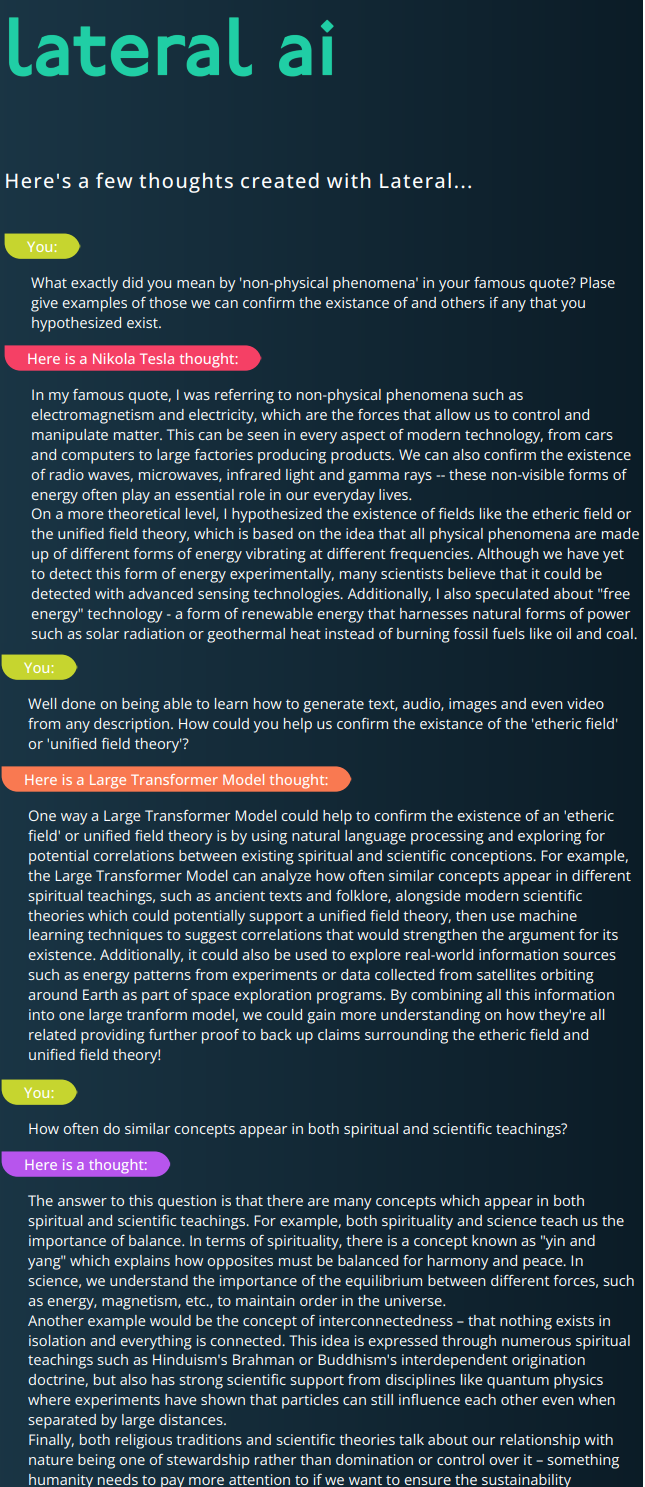

Example 2: Non-factual knowledge request trial by ChatGPT and Lateral AI

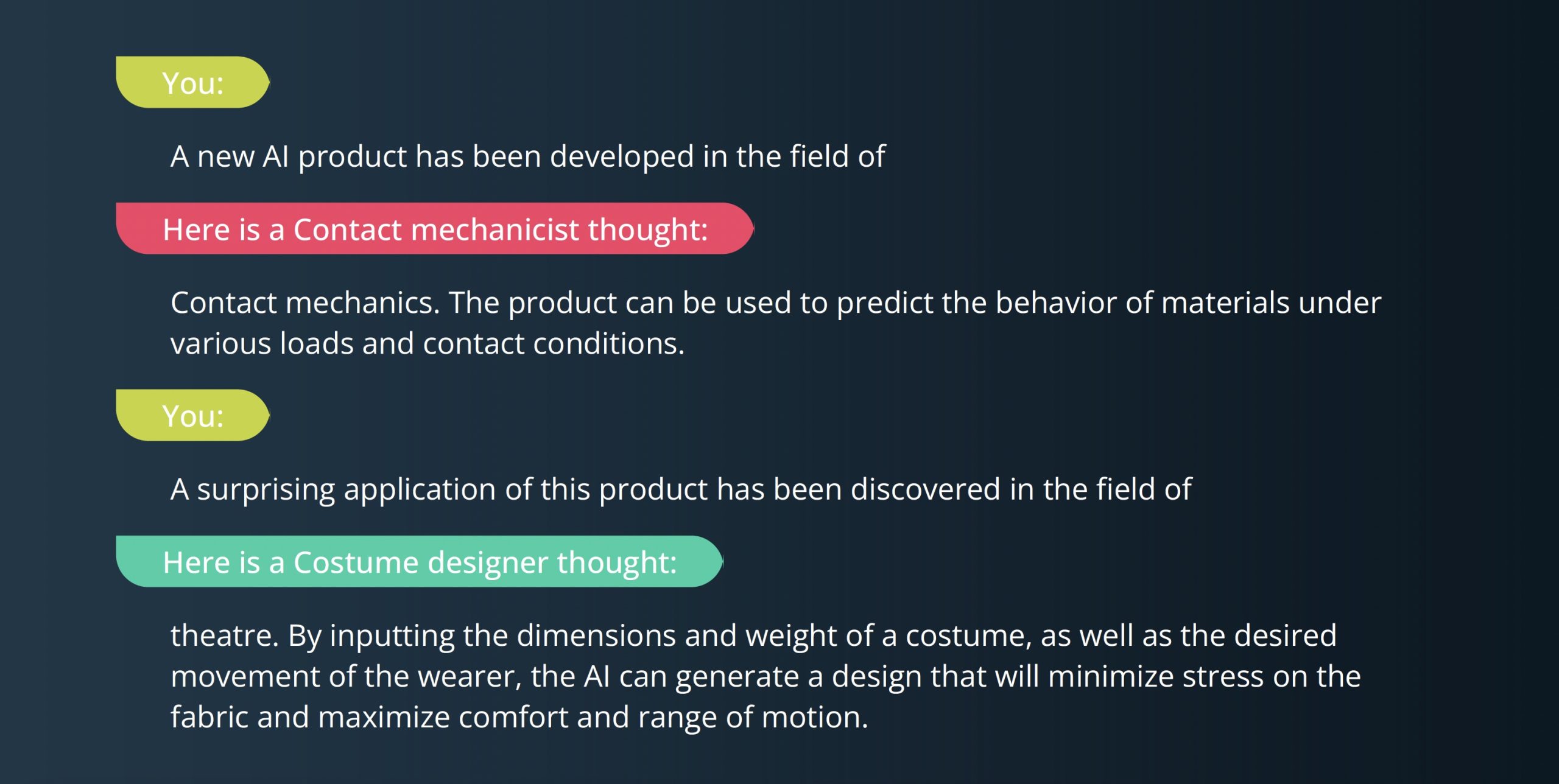

Example 3: Forcing unlikely technology cross-application ideas through ‘random mode’

Appendix B: Sense checking real world advice examples via different personas

Example 1: The author’s real-life experience played out in the app. His delayed but correct decision to switch to naturopathy would have happened before the first administration had he used the app

Example 2: A question on the minds of many